Politicians Discover AI: Immediately Try to Ban It

In a plot twist that surprised absolutely no one, global lawmakers have finally discovered artificial intelligence. After months of asking their aides what the ChatGPT thing was, politicians from Washington to Brussels to New Delhi are now promising to regulate AI with the same enthusiasm they once reserved for banning TikTok and vaping.

According to Bloomberg, AI was the second most-mentioned topic in global parliaments in 2025, trailing only the economy and whether lunch counts as a deductible expense. The BBC reported that more than 60 new bills addressing AI ethics, security, or existential risk are currently being debated. None of them agree on what AI actually is.

The political world has entered what The Guardian calls the age of algorithmic panic, a time when legislators believe that the same people who cannot fix potholes can somehow align machine intelligence with human values.

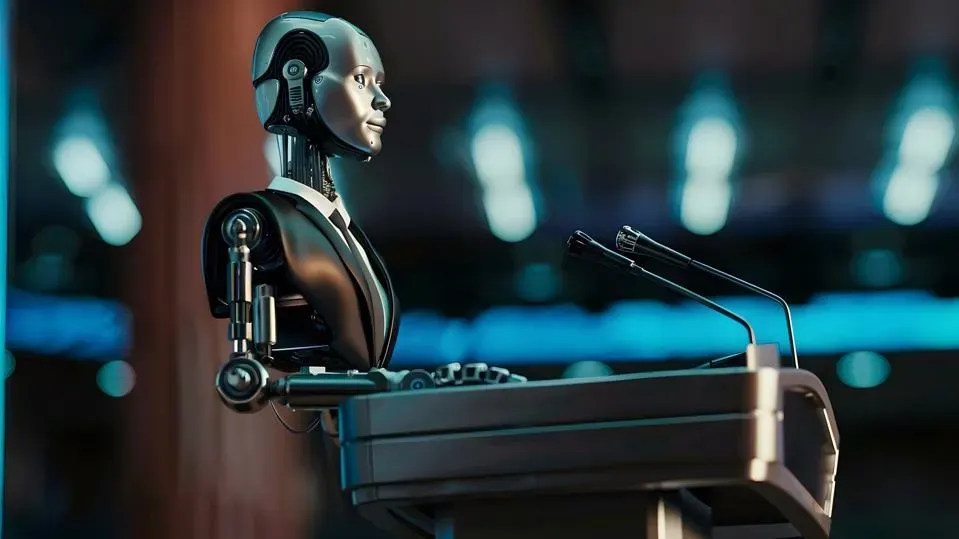

AI has passed the Turing test. Now it faces something far more terrifying: the House Committee on Technology Oversight.

The Hearing of the Century

The first major AI hearing in Washington looked like a tech demo in a nursing home. Senators squinted at charts, staffers whispered definitions, and someone accidentally tried to plug a USB cable into a paper folder.

Reuters reported that the session included a live demonstration of an AI chatbot answering questions about inflation, climate policy, and tax reform. The bot performed flawlessly until one senator asked, “Can you run for president?” and another shouted, “Not on my watch.”

The Wall Street Journal noted that at least three lawmakers confused open source with open borders. One asked whether the AI could be contained in a firewall big enough to cover the Midwest. Another suggested putting AI on a blockchain, an idea now under review by the Committee on Ideas That Sound Technical.

Meanwhile, the European Parliament held its own debate, where the average speech mentioned “ethics” nine times and “understanding” zero times. The EU’s new Artificial Intelligence Act classifies technologies into risk tiers: low, medium, high, and existential, roughly mirroring the public’s trust in their elected officials.

Across the Channel, the UK launched its AI Safety Summit in a converted castle, complete with a photo op of global leaders looking deeply concerned while standing next to a robot that had been turned off for safety reasons. The Guardian described it as a conference about the future of intelligence attended exclusively by people whose phones are still on silent mode from 2014.

The Regulatory Gold Rush

Once politicians realized they could not code, they discovered something better. They could regulate.

Bloomberg Intelligence estimates that by 2026, global spending on AI compliance and governance could exceed 30 billion dollars. Lawmakers everywhere are drafting frameworks, guidelines, and acronyms faster than the technology itself evolves.

The United States has proposed the Algorithmic Accountability and Transparency Act, which requires companies to explain AI decisions in plain language. Early tests show this is impossible, as even AI researchers struggle to explain why their models sometimes confuse hot dogs with Labradors.

In France, the Digital Affairs Ministry launched Le Code d’Intelligence, a 214-page document outlining ethical obligations for AI developers. It includes recommendations for fairness, transparency, and, according to one confused translator, emotional restraint.

India’s government, not to be outdone, created a national task force to study AI alignment with Indian values. The committee’s first meeting was postponed because the chatbot scheduled to take minutes began generating Bollywood plot summaries.

Meanwhile, lobbyists are having the time of their lives. Reuters estimates that more than 400 lobbying firms are now representing AI interests, ranging from self-driving car manufacturers to digital parrot startups. Every industry wants its algorithm exempted from regulation, claiming it is for the greater good or still in beta.

The Wall Street Journal reported that one U.S. senator recently proposed taxing AI-generated content as intellectual property, while another suggested giving AI systems voting rights once they prove fiscal responsibility.

In short, the race to regulate AI is less about understanding it and more about monetizing confusion.

Fear, Fiction, and Free Lunches

Politicians love two things: a good crisis and a free buffet. AI offers both.

The BBC reported that parliamentary cafeterias are now serving AI-themed menus during tech hearings. Items include Neural Network Nachos and Synthetic Salmon. The symbolism writes itself.

For politicians, AI has become the perfect talking point. It is futuristic, frightening, and impossible to define. The same leaders who once warned about Y2K are now warning about AGI, often with the same PowerPoint slides, only updated with stock images of robots shaking hands.

The Guardian captured the absurdity in a headline that read, “World leaders vow to regulate AI before understanding it.” It was not satire.

Bloomberg economists predict that AI could add 15 trillion dollars to global GDP by 2030, yet politicians are focusing on the risk of robot sentience rather than the more pressing questions of how AI will affect employment, taxation, and inequality. One EU delegate was overheard saying, “We should ban it until we figure out how to profit from it.”

Across the Atlantic, the U.S. Congress is split between two factions: the AI Optimists, who believe the technology will unlock innovation, and the AI Alarmists, who believe it will unlock the apocalypse. The third group, known as The Confused, believes AI already runs the Wi-Fi in their offices.

The Wall Street Journal quoted a Silicon Valley executive describing this phase as regulation by paranoia, where policy is written like science fiction fan fiction. Committees now include experts in law, philosophy, and general human feelings.

At the United Nations, delegates proposed an AI Treaty to ensure global cooperation, which promptly fell apart when three countries demanded veto power over machine learning itself.

Meanwhile, the AI industry watches with quiet amusement. One CEO told Reuters, “We are witnessing humanity’s greatest minds argue about what an algorithm thinks of them.”

Conclusion

Artificial intelligence has achieved what nothing else could: bipartisan agreement that something must be done, preferably before lunch.

Politicians, after decades of ignoring science, are suddenly fascinated by it, mainly because it polls well. They fear AI could replace them, though critics argue machines would need much lower intelligence for that to happen.

As The Guardian quipped, AI may never achieve consciousness, but it has already achieved political panic.

Bloomberg reports that AI regulation is now one of the fastest-growing sectors in law and lobbying. For an industry that does not yet understand its own output, that is impressive.

Perhaps the real danger is not that AI becomes too smart. It is that it starts watching the hearings and decides humans are not worth the data storage.

Until then, expect more committees, more panic, and more headlines about saving humanity from its own spreadsheets. The machines may be learning, but our politicians are still buffering.
